TL;DR: We introduce Any3DIS, a novel class-agnostic approach for 3D instance segmentation that leverages 2D mask tracking to segment 3D objects in point cloud scenes.

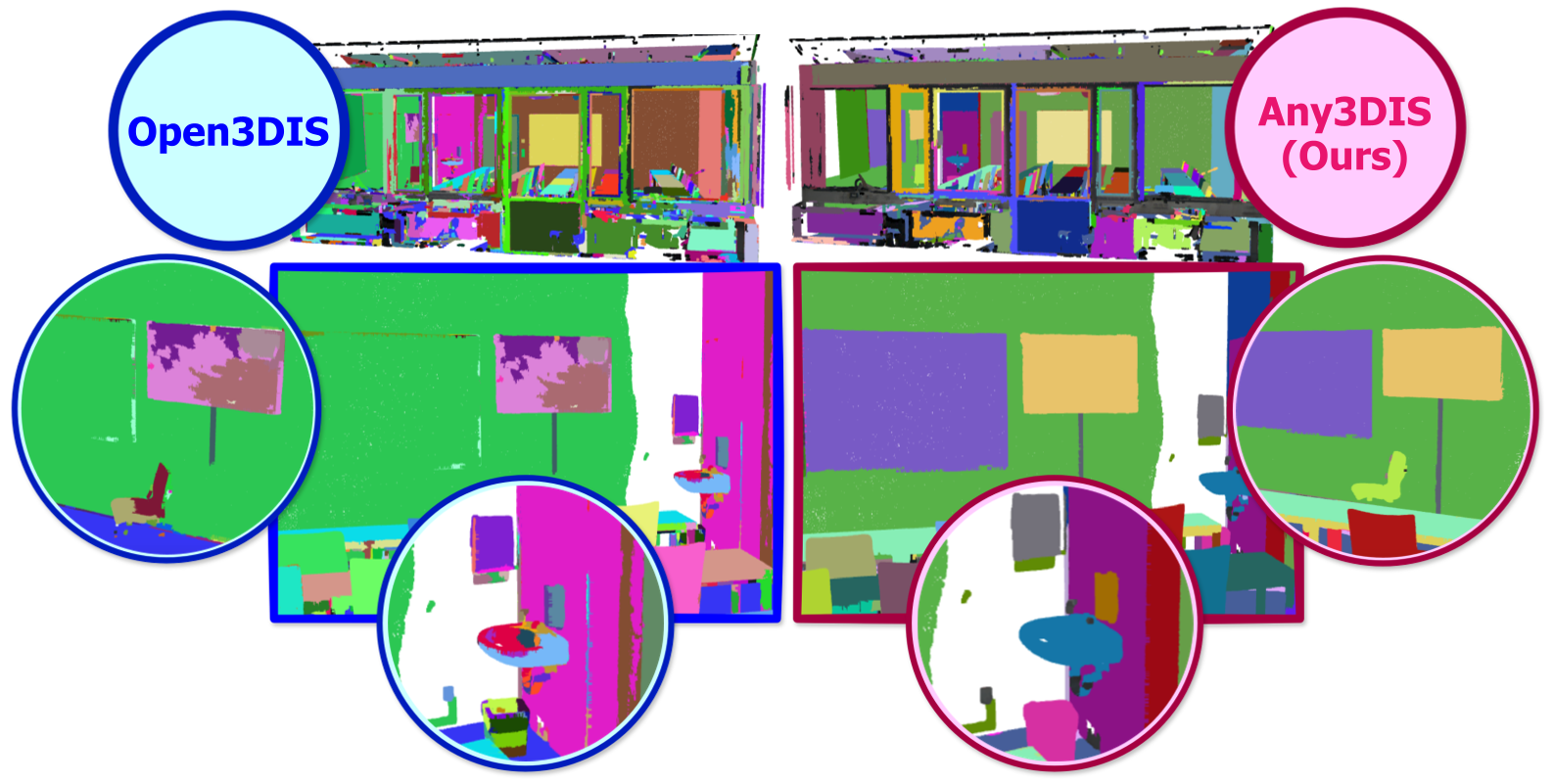

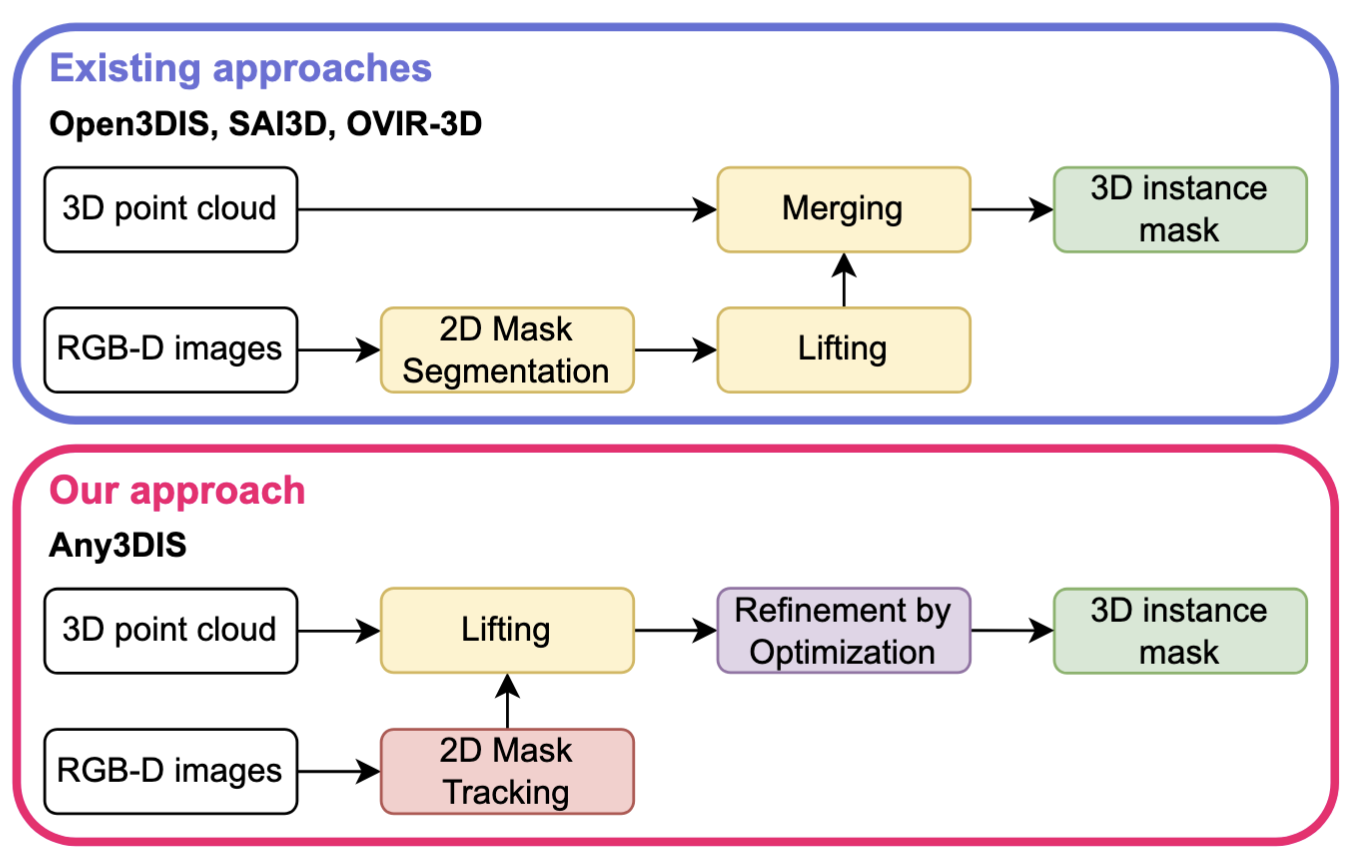

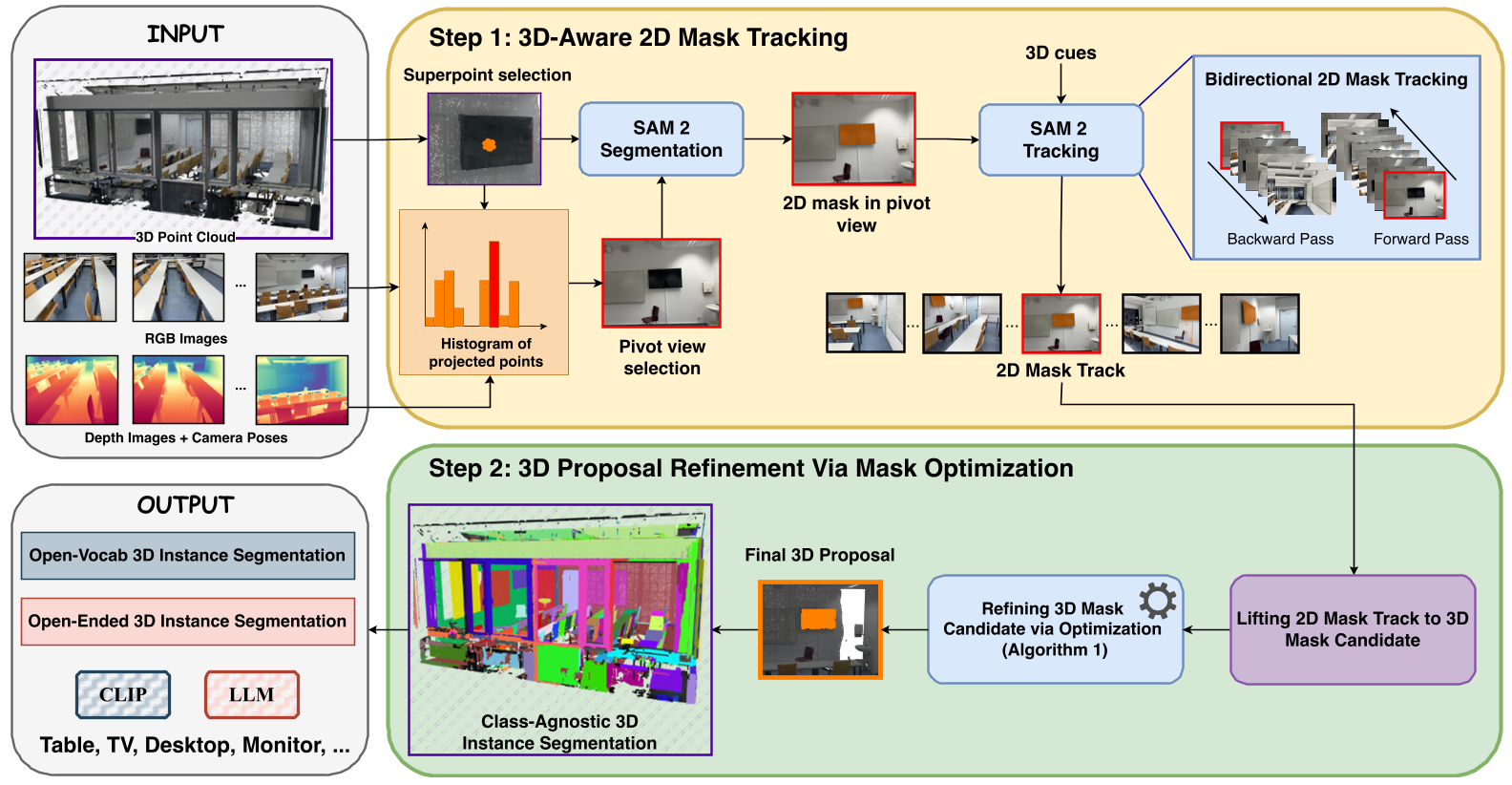

Existing 3D instance segmentation methods frequently suffer from over-segmentation, producing redundant and inaccurate 3D proposals that complicate downstream tasks. This stems from their unsupervised merging approach: dense 2D instance masks are lifted across frames into point clouds to form 3D candidate proposals without direct supervision. These candidates are then hierarchically merged based on heuristic criteria, often leaving numerous redundant segments that fail to combine into precise 3D proposals. To overcome these limitations, we propose a 3D-Aware 2D Mask Tracking module that uses robust 3D priors from a 2D mask segmentation and tracking foundation model (SAM 2) to ensure consistent object masks across video frames. Rather than merging all visible superpoints across views to create a 3D mask, our 3D Mask Optimization module uses a dynamic programming algorithm to select an optimal set of views, refining the superpoints to produce a final 3D proposal for each object. Our approach achieves comprehensive object coverage within the scene while reducing unnecessary proposals, which could otherwise impair downstream applications. Evaluations on ScanNet200 and ScanNet++ confirm the effectiveness of our method, with improvements across Class-Agnostic, Open-Vocabulary, and Open-Ended 3D Instance Segmentation tasks.
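Lifting a 2D mask into the point cloud, the step existing methods apply to every dense 2D instance mask, relies on each frame's depth map and camera parameters. As a minimal sketch of that lifting direction, the NumPy function below back-projects a binary 2D mask into world-space points under the standard pinhole camera model. It is illustrative only: the name `lift_mask_to_points`, the depth convention (16-bit depth in millimetres, hence `depth_scale=1000`, as in ScanNet's depth frames) and the 4x4 camera-to-world pose are assumptions, not details taken from Any3DIS or the methods it compares against.

```python
import numpy as np


def lift_mask_to_points(mask, depth, K, cam_to_world, depth_scale=1000.0):
    """Back-project the pixels of a binary 2D mask into world-space 3D points.

    Hypothetical helper (not from the Any3DIS code base).
    mask:         (H, W) boolean mask of one 2D instance
    depth:        (H, W) raw depth map; assumed to be in millimetres
    K:            (3, 3) pinhole intrinsics
    cam_to_world: (4, 4) camera-to-world pose
    """
    mask = np.asarray(mask, dtype=bool)
    # Keep only masked pixels that have a valid (non-zero) depth reading.
    v, u = np.nonzero(mask & (depth > 0))
    z = depth[v, u].astype(np.float64) / depth_scale  # metres

    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy

    # Homogeneous camera-space points, then transform to world coordinates.
    pts_cam = np.stack([x, y, z, np.ones_like(z)], axis=1)  # (N, 4)
    pts_world = pts_cam @ cam_to_world.T
    return pts_world[:, :3]
```

Associating the lifted points with the scene point cloud or its superpoints, and the subsequent merging, is not shown.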

Difference between Open3DIS and our Any3DIS. Open3DIS first segments 2D masks in every view, then lifts them to 3D proposals and merges them, whereas our approach tracks 2D masks across views, lifts them directly to 3D proposals, and then refines these proposals through optimization.

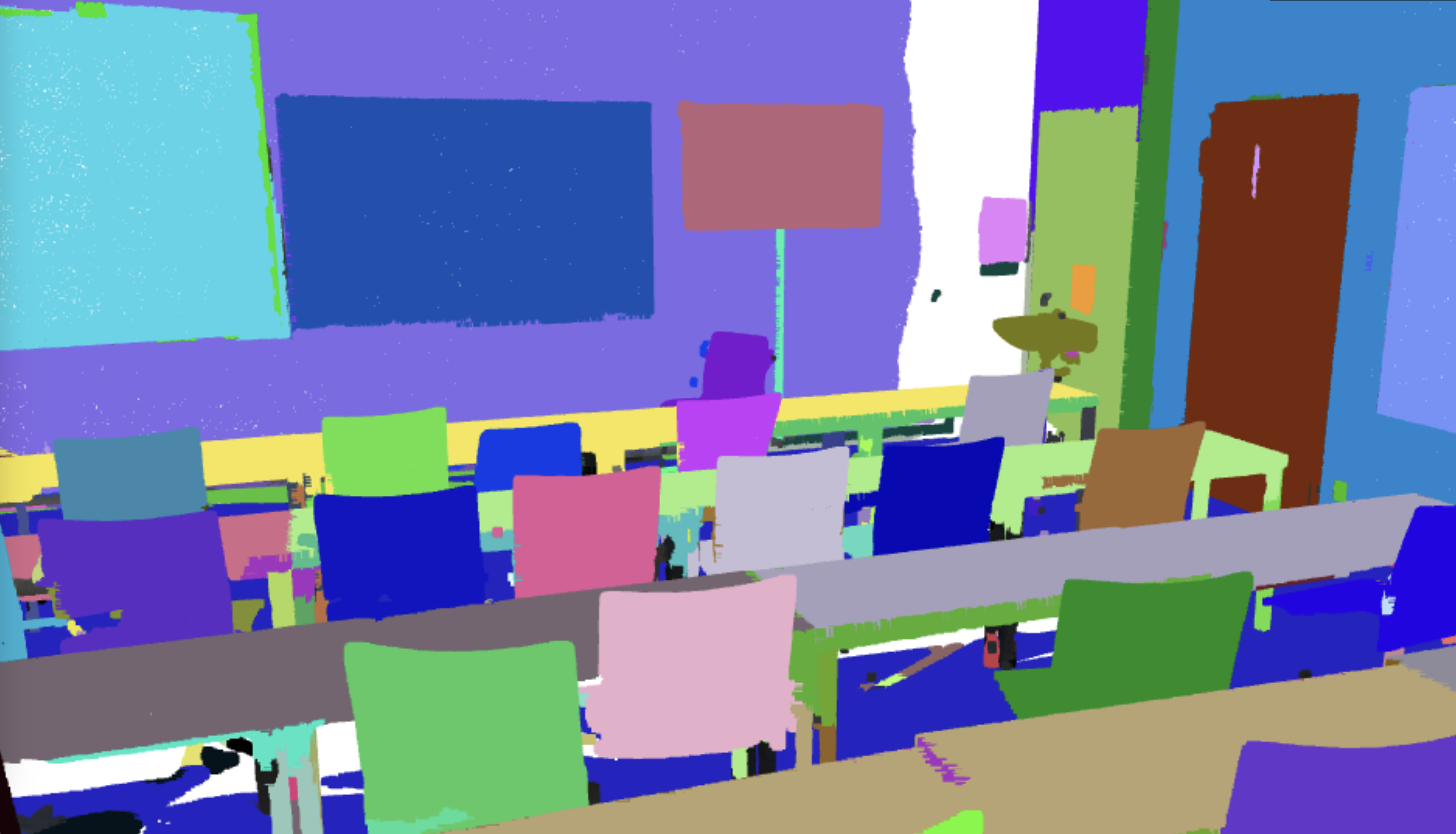

We propose a novel class-agnostic approach for 3D instance segmentation through 2D mask tracking. Specifically, for each selected superpoint we identify its pivot view: the frame in the RGB-D sequence where the superpoint is most visible. Using the SAM 2 model, we then obtain the 2D segmentation of this superpoint in the pivot view and track this 2D segmentation across the other views. From the tracked 2D masks, we generate a 3D mask candidate by aggregating all 3D superpoints whose projections intersect any of these masks. These superpoints are then refined by mask optimization to produce the final 3D mask proposal, which is added to the class-agnostic 3D instance segmentation bank for downstream tasks.
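Two of the steps above, choosing a superpoint's pivot view and collecting the superpoints whose projections fall inside the tracked 2D masks, can be sketched with plain NumPy. This is a hypothetical illustration, not the Any3DIS implementation: the function names, the `(K, world_to_cam, depth_m)` frame tuple, measuring visibility as the number of projected points that pass a depth-consistency (occlusion) check, and the 5 cm tolerance are all assumptions. SAM 2 tracking and the mask optimization step are out of scope; `masks` stands in for the tracker's output, with `None` for frames where the object was not tracked.

```python
import numpy as np


def visible_mask(points, K, world_to_cam, depth_m, tol=0.05):
    """Project (N, 3) world points into one frame.

    Returns pixel coords (u, v) and a boolean flag marking points that land inside
    the image AND agree with the sensor depth (assumed occlusion test, tol in metres).
    """
    homo = np.hstack([points, np.ones((len(points), 1))])
    cam = (homo @ world_to_cam.T)[:, :3]
    z = cam[:, 2]
    h, w = depth_m.shape
    in_front = z > 1e-6
    safe_z = np.where(in_front, z, 1.0)  # avoid dividing by z <= 0
    u = np.round(K[0, 0] * cam[:, 0] / safe_z + K[0, 2]).astype(np.int64)
    v = np.round(K[1, 1] * cam[:, 1] / safe_z + K[1, 2]).astype(np.int64)
    vis = in_front & (u >= 0) & (u < w) & (v >= 0) & (v < h)
    # Occlusion check: the projected depth must match the depth map at that pixel.
    idx = np.flatnonzero(vis)
    d = depth_m[v[idx], u[idx]]
    vis[idx] = (d > 0) & (np.abs(z[idx] - d) < tol)
    return u, v, vis


def pivot_view(sp_points, frames):
    """Hypothetical: index of the frame in which the most superpoint points are visible."""
    counts = [visible_mask(sp_points, K, T, d)[2].sum() for K, T, d in frames]
    return int(np.argmax(counts))


def superpoints_hit_by_masks(superpoints, frames, masks):
    """Hypothetical: union over views of superpoints whose visible projection
    overlaps that view's tracked 2D mask (None = object not tracked in that frame)."""
    hit = set()
    for (K, T, d), m in zip(frames, masks):
        if m is None:
            continue
        for sp_id, pts in enumerate(superpoints):
            u, v, vis = visible_mask(pts, K, T, d)
            if np.any(m[v[vis], u[vis]]):
                hit.add(sp_id)
    return sorted(hit)
```

Counting only depth-consistent points keeps a superpoint from claiming a view in which it is hidden. Dropping the occlusion check would follow the "projections intersect" wording more literally, but it would also collect superpoints that lie behind the object yet project into its mask.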

@article{nguyen2024any3dis,
  title={Any3DIS: Class-Agnostic 3D Instance Segmentation by 2D Mask Tracking},
  author={Nguyen, Phuc and Luu, Minh and Tran, Anh and Pham, Cuong and Nguyen, Khoi},
  journal={arXiv preprint arXiv:2411.16183},
  year={2024}
}